[1] The streaming rollout of deep networks - towards fully model-parallel execution

Volker Fischer, Jan Köhler, Thomas Pfeil

Bosch Center for Artificial Intelligence

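Deep neural networks, especially recurrent networks, are promising for controlling autonomous agents that interact with their environment in real time. This, however, requires temporal features to be integrated seamlessly into the network structure. Recurrent networks are usually rolled out (unrolled) in time for training and inference, but there is more than one way to perform this rollout.

During inference, the layers of a network are typically computed one after another in sequence. As a result, information is integrated only sparsely over time and response times are long.

This paper proposes a theoretical framework for how networks are rolled out, in which different rollouts correspond to different degrees of model parallelism. Certain rollouts produce earlier and more frequent responses, and these early responses often perform better. The streaming rollout performs best: it allows the network to be executed in a fully model-parallel way, so runtime can be reduced by spreading the computation over massively parallel hardware.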
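The difference between the two kinds of rollout can be illustrated with a toy chain of scalar layers. This is a minimal sketch under stated assumptions, not the authors' framework or the statestream code: the sequential rollout pushes each frame through all layers before reading the next frame, while the streaming rollout puts a one-tick delay on every edge between layers, so all layer updates within one tick depend only on the previous tick and could run in parallel. The layer functions and inputs are hypothetical.

```python
from typing import Callable, List, Optional, Sequence

Layer = Callable[[float], float]


def sequential_rollout(layers: Sequence[Layer], inputs: Sequence[float]) -> List[float]:
    """Push each frame through all layers before the next frame is read.

    Per frame this costs len(layers) strictly serial layer evaluations.
    """
    outputs = []
    for x in inputs:
        h = x
        for f in layers:
            h = f(h)
        outputs.append(h)
    return outputs


def streaming_rollout(layers: Sequence[Layer], inputs: Sequence[float]) -> List[Optional[float]]:
    """Every edge between layers carries a one-tick delay.

    At each tick, layer i reads the state its predecessor had at the previous
    tick, so the layer updates within a tick are independent of each other and
    could be executed in parallel. The output lags the input by
    len(layers) - 1 ticks, but a new output is produced on every tick.
    """
    states: List[Optional[float]] = [None] * len(layers)
    outputs: List[Optional[float]] = []
    for x in inputs:
        # Snapshot of last tick's states; layer 0 reads the current input.
        prev = [x] + states[:-1]
        # None marks a layer that has not received any data yet.
        states = [f(p) if p is not None else None for f, p in zip(layers, prev)]
        outputs.append(states[-1])
    return outputs


layers = [lambda h: h + 1, lambda h: h * 2, lambda h: h - 3]
inputs = [1, 2, 3, 4, 5]
print(sequential_rollout(layers, inputs))  # [1, 3, 5, 7, 9]
print(streaming_rollout(layers, inputs))   # [None, None, 1, 3, 5]
```

With three layers, the streaming output lags by two ticks, but each tick needs only one round of (parallelizable) layer updates instead of three serial ones, so a new response is available after every update. The toy has no skip connections, so it does not reproduce the earlier responses reported in the paper; it only illustrates the parallelism and the response frequency.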

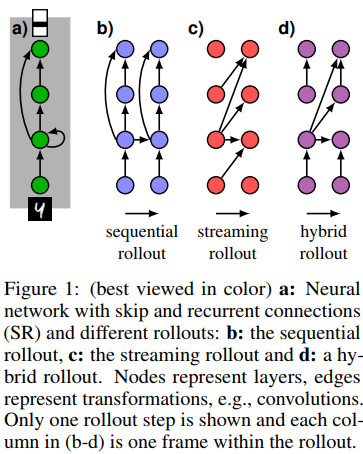

A comparison of different rollouts:

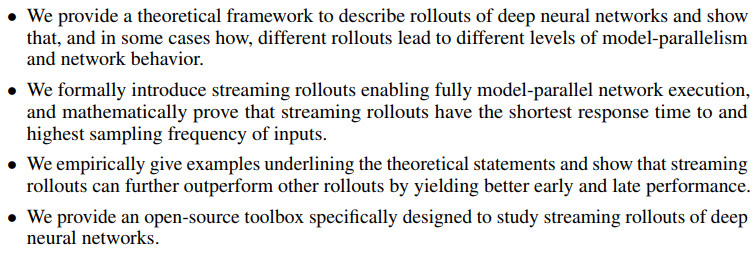

The main contributions of this paper can be summarized as follows:

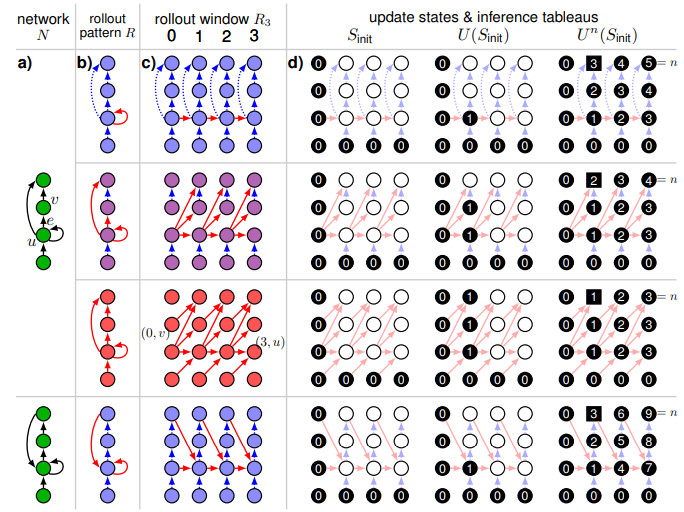

Different rollouts and their corresponding update and inference schemes are compared below:

Code:

https://github.com/boschresearch/statestream

[2] Can We Gain More from Orthogonality Regularizations in Training Deep CNNs?

Nitin Bansal, Xiaohan Chen, Zhangyang Wang

Texas A&M University

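To train deep convolutional neural networks more effectively, this paper proposes novel orthogonality regularizers built on several advanced analytical tools, such as mutual coherence and the restricted isometry property. These plug-and-play regularizers can be added to the training of any convolutional neural network with little effort.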
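The plug-and-play idea can be sketched by adding the gradient of an orthogonality penalty to whatever task gradient a model already has. The NumPy sketch below uses soft orthogonality (SO, one of the regularizers listed further down) on a convolution kernel reshaped into a matrix W whose columns are flattened filters. For A = WᵀW − I, the gradient of ‖A‖²_F is 4WA. The (out, in, kh, kw) kernel layout, the weight λ, the step size, and the random initialization are illustrative assumptions, and the task gradient is a zero placeholder so that only the regularizer acts.

```python
import numpy as np


def kernel_to_matrix(kernel: np.ndarray) -> np.ndarray:
    """Reshape a conv kernel of shape (out, in, kh, kw) into W of shape (in*kh*kw, out).

    Each column of W is one flattened filter (the layout is an assumption).
    """
    return kernel.reshape(kernel.shape[0], -1).T


def soft_orthogonality(W: np.ndarray):
    """Return the SO penalty ||W^T W - I||_F^2 and its gradient 4 W (W^T W - I)."""
    A = W.T @ W - np.eye(W.shape[1])
    return float(np.sum(A * A)), 4.0 * W @ A


rng = np.random.default_rng(0)
kernel = 0.1 * rng.standard_normal((8, 4, 3, 3))  # hypothetical: 8 filters of size 4x3x3
W = kernel_to_matrix(kernel)                       # shape (36, 8)

lam, lr = 1.0, 0.05  # hypothetical regularization weight and step size
initial_penalty, _ = soft_orthogonality(W)
for _ in range(500):
    task_grad = np.zeros_like(W)  # placeholder: a real model would supply its loss gradient here
    _, reg_grad = soft_orthogonality(W)
    W = W - lr * (task_grad + lam * reg_grad)  # plug-in: just add the regularizer's gradient

final_penalty, _ = soft_orthogonality(W)
print(f"SO penalty: {initial_penalty:.4f} -> {final_penalty:.2e}")
```

Because the regularizer only contributes an extra gradient term, it can be attached to any layer without changing the architecture; in this toy run, the filters end up (numerically) orthonormal.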

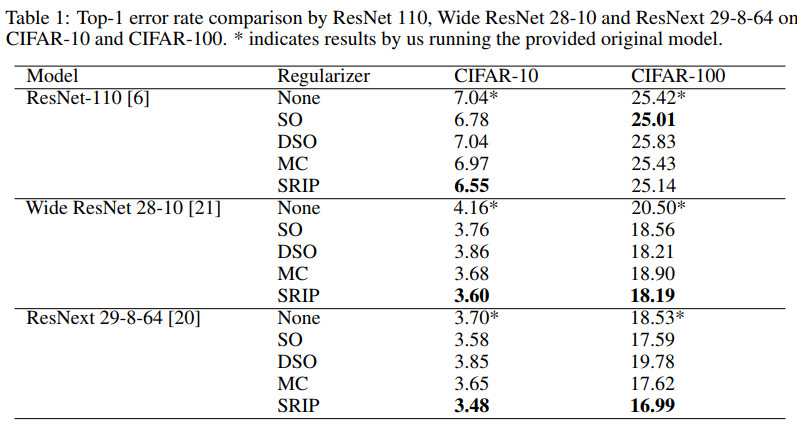

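The authors apply these regularizers to several strong, widely used models such as ResNet, Wide ResNet, and ResNeXt, and evaluate them on popular computer vision datasets including CIFAR-10, CIFAR-100, SVHN, and ImageNet.

With the proposed regularizers, models not only train faster but also converge more stably, while reaching competitive accuracy.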

Results of several models on two datasets with different regularizers:

Here, ResNet-110 is from the paper:

Deep residual learning for image recognition, CVPR 2016

Code:

https://github.com/KaimingHe/deep-residual-networks

Wide ResNet 28-10 is from the paper:

Wide residual networks, BMVC 2016

Code:

https://github.com/szagoruyko/wide-residual-networks

https://github.com/xternalz/WideResNet-pytorch

ResNeXt 29-8-64 is from the paper:

Aggregated residual transformations for deep neural networks, CVPR 2017

Code:

https://github.com/facebookresearch/ResNeXt

The regularizers are abbreviated as follows:

SO: Soft Orthogonality

DSO: Double Soft Orthogonality

MC: Mutual Coherence

SRIP: Spectral Restricted Isometry Property
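The four penalties can be written down directly for a weight matrix W whose columns are flattened filters. The NumPy sketch below follows the standard definitions as we understand them: SO and DSO are squared Frobenius norms, MC is the largest off-diagonal entry of the column-normalized Gram matrix, and SRIP is the spectral norm of WᵀW − I. The function names are hypothetical, the weighting coefficient λ is omitted, and SRIP is computed exactly with `np.linalg.norm(..., ord=2)`; a power iteration is the cheaper approximation one would typically use during training.

```python
import numpy as np


def gram_residual(W: np.ndarray) -> np.ndarray:
    """W^T W - I for a weight matrix whose columns are flattened filters."""
    return W.T @ W - np.eye(W.shape[1])


def so(W: np.ndarray) -> float:
    """Soft Orthogonality: ||W^T W - I||_F^2."""
    return float(np.sum(gram_residual(W) ** 2))


def dso(W: np.ndarray) -> float:
    """Double Soft Orthogonality: ||W^T W - I||_F^2 + ||W W^T - I||_F^2."""
    row_residual = W @ W.T - np.eye(W.shape[0])
    return so(W) + float(np.sum(row_residual ** 2))


def mc(W: np.ndarray) -> float:
    """Mutual Coherence: largest |cosine| between two distinct columns.

    After unit-normalizing the columns, the diagonal of W^T W - I is zero,
    so the max-abs entry equals the largest off-diagonal coherence.
    """
    Wn = W / np.linalg.norm(W, axis=0, keepdims=True)
    return float(np.max(np.abs(gram_residual(Wn))))


def srip(W: np.ndarray) -> float:
    """Spectral Restricted Isometry Property: spectral norm of W^T W - I.

    Computed exactly here; a power iteration would approximate it more cheaply.
    """
    return float(np.linalg.norm(gram_residual(W), ord=2))


W = np.array([[1.0, 1.0],
              [0.0, 1.0]])
print(so(W), dso(W), mc(W), srip(W))
```

For this 2×2 example, WᵀW − I = [[0, 1], [1, 1]], so SO = 3, DSO = 6, MC = 1/√2 ≈ 0.707, and SRIP = (1 + √5)/2 ≈ 1.618. All four are zero for a square orthogonal matrix. For a tall matrix with orthonormal columns, only DSO stays positive, because WWᵀ cannot equal the identity.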

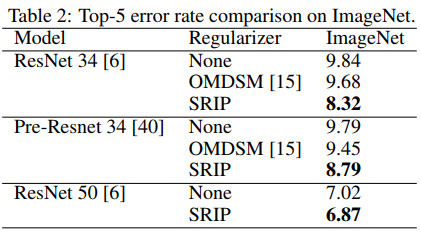

Here, ResNet 34 and ResNet 50 are from the paper:

Deep residual learning for image recognition, CVPR 2016

Code:

https://github.com/KaimingHe/deep-residual-networks

Pre-ResNet 34 is from the paper:

Identity mappings in deep residual networks, ECCV 2016

Code:

https://github.com/FlorianMuellerklein/Identity-Mapping-ResNet-Lasagne

OMDSM stands for Optimization over Multiple Dependent Stiefel Manifolds,

from the paper:

Orthogonal weight normalization: Solution to optimization over multiple dependent Stiefel manifolds in deep neural networks, AAAI 2018

Code:

https://github.com/huangleiBuaa/OthogonalWN

Code for this paper:

https://github.com/nbansal90/Can-we-Gain-More-from-Orthogonality

[3] Recurrently Controlled Recurrent Networks

Yi Tay, Luu Anh Tuan, and Siu Cheung Hui

Nanyang Technological University, Institute for Infocomm Research

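Among recurrent neural networks, long short-term memory (LSTM) networks and gated recurrent units (GRUs) are classic building blocks widely used for sequence modeling.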

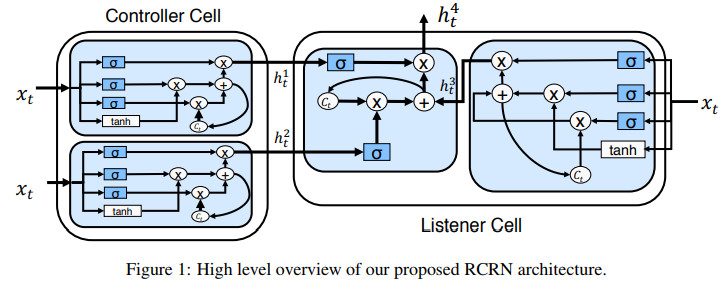

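This paper proposes the recurrently controlled recurrent network (RCRN), which produces more expressive sequence encodings. Its core idea is to use recurrent networks to learn the recurrent gating functions. The architecture consists of two parts, a controller cell and a listener cell, where the recurrent controller actively influences the compositionality of the listener.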
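A rough NumPy sketch of the controller/listener idea follows. It rests on several assumptions: two plain tanh RNN controllers, unidirectional for brevity, produce hidden states that are passed through a sigmoid and used as the listener's forget and output gates. The listener's update equations, the parameter names (`forget_ctrl`, `output_ctrl`, `Wz`), and the initialization are hypothetical illustrations of "recurrent networks learning recurrent gating functions", not the paper's exact formulation.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def controller_rnn(xs, Wx, Wh, b):
    """Plain tanh RNN; its hidden states are later used as gate pre-activations."""
    h = np.zeros(Wh.shape[0])
    states = []
    for x in xs:
        h = np.tanh(Wx @ x + Wh @ h + b)
        states.append(h)
    return np.stack(states)


def rcrn_sketch(xs, params):
    """Listener cell whose forget/output gates come from two controller RNNs."""
    forget_ctrl = controller_rnn(xs, *params["forget_ctrl"])
    output_ctrl = controller_rnn(xs, *params["output_ctrl"])
    Wz = params["Wz"]
    c = np.zeros(Wz.shape[0])
    outputs = []
    for x, fc, oc in zip(xs, forget_ctrl, output_ctrl):
        f = sigmoid(fc)       # forget gate produced by a recurrent controller
        o = sigmoid(oc)       # output gate produced by a recurrent controller
        z = np.tanh(Wz @ x)   # candidate content from the current input
        c = f * c + (1.0 - f) * z
        outputs.append(o * np.tanh(c))
    return np.stack(outputs)


def init_params(d_in, d_hid, rng):
    """Hypothetical small random initialization for the sketch."""
    def rnn():
        return (0.1 * rng.standard_normal((d_hid, d_in)),
                0.1 * rng.standard_normal((d_hid, d_hid)),
                np.zeros(d_hid))
    return {"forget_ctrl": rnn(), "output_ctrl": rnn(),
            "Wz": 0.1 * rng.standard_normal((d_hid, d_in))}


rng = np.random.default_rng(0)
xs = rng.standard_normal((5, 3))  # 5 time steps, 3 input features
hs = rcrn_sketch(xs, init_params(3, 4, rng))
print(hs.shape)  # (5, 4)
```

The design point is that the gates are no longer a single affine map of the current input and previous state. Each gate is itself the output of a recurrent network, so gating decisions can depend on the whole history seen by the controller.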

该方法可以用于情感分析,比如SST, IMDb,Amzon reviews这些数据集,问题分类,比如TREC数据集,蕴含分类,比如SNLI,SciTail数据集,答案选取问题,比如WikiQA, TrecQA数据集,以及阅读理解问题,比如NarrativeQA数据集。

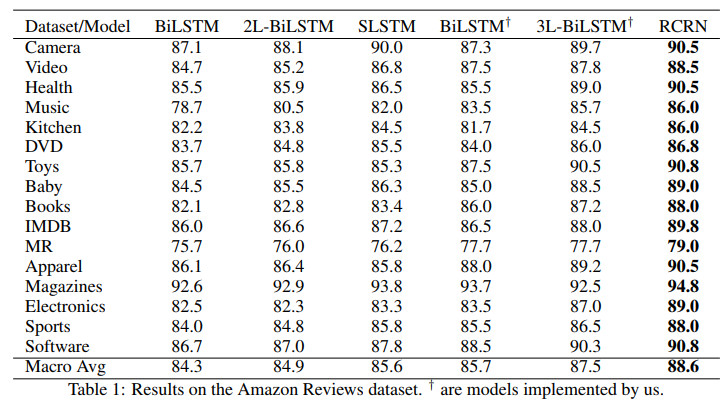

基于26个数据集的实验表明,本文所提出的RCRN不仅优于BiLSTMs,而且优于堆叠BiLSTMs。

在Amazon Review数据集上几种方法的效果对比如下

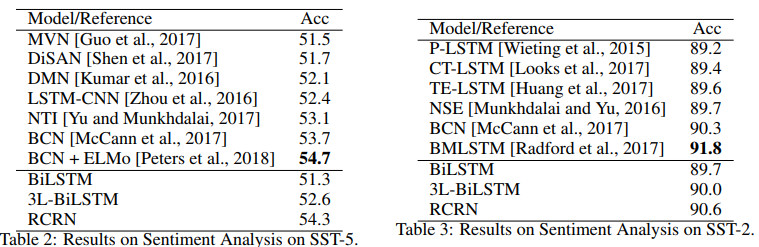

Comparison of several methods on sentiment analysis datasets:

Here, MVN is from the paper:

End-to-end multi-view networks for text classification, 2017

DiSAN is from the paper:

DiSAN: Directional self-attention network for RNN/CNN-free language understanding, AAAI 2018

Code:

https://github.com/taoshen58/DiSAN

DMN is from the paper:

Ask me anything: Dynamic memory networks for natural language processing, ICML 2016

Code:

https://github.com/YerevaNN/Dynamic-memory-networks-in-Theano

https://github.com/DongjunLee/dmn-tensorflow

LSTM-CNN is from the paper:

Text classification improved by integrating bidirectional lstm with two-dimensional max pooling

NTI is from the paper:

Neural tree indexers for text understanding

BCN is from the paper:

Learned in translation: Contextualized word vectors, NIPS 2017

BCN+ELMo is from the paper:

Deep contextualized word representations

P-LSTM is from the paper:

Towards universal paraphrastic sentence embeddings, ICLR 2016

Code:

https://github.com/jwieting/iclr2016

CT-LSTM is from the paper:

Deep learning with dynamic computation graphs

Code:

https://github.com/tensorflow/fold

TE-LSTM is from the paper:

Encoding syntactic knowledge in neural networks for sentiment classification, TOIS 2017

NSE is from the paper:

Neural semantic encoders

Code:

https://github.com/pdasigi/neural-semantic-encoders

BMLSTM is from the paper:

Learning to generate reviews and discovering sentiment, 2017

Code:

https://github.com/openai/generating-reviews-discovering-sentiment

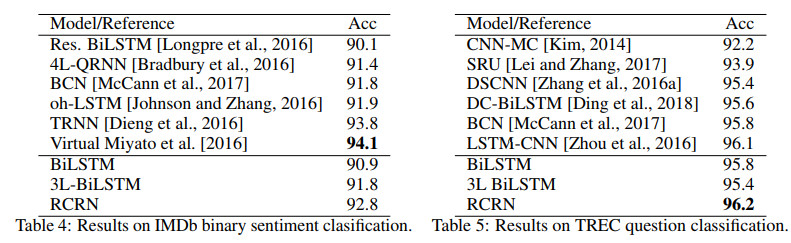

Comparison on the IMDb and TREC datasets:

Here, Res. BiLSTM is from the paper:

A way out of the odyssey: Analyzing and combining recent insights for lstms

4L-QRNN is from the paper:

Quasi-recurrent neural networks

Code:

https://github.com/salesforce/pytorch-qrnn

oh-LSTM is from the paper:

Supervised and semi-supervised text categorization using lstm for region embeddings

TRNN is from the paper:

Topicrnn: A recurrent neural network with long-range semantic dependency

Code:

https://github.com/dangitstam/topic-rnn

virtual is from the paper:

Adversarial training methods for semi-supervised text classification

Code:

https://github.com/aonotas/adversarial_text

CNN-MC is from the paper:

Convolutional neural networks for sentence classification, EMNLP 2014

Code:

https://github.com/yoonkim/CNN_sentence

SRU is from the paper:

Training rnns as fast as cnns

Code:

https://github.com/taolei87/sru

DSCNN is from the paper:

Dependency sensitive convolutional neural networks for modeling sentences and documents

DC-BiLSTM is from the paper:

Densely connected bidirectional lstm with applications to sentence classification

Code:

https://github.com/IsaacChanghau/Dense_BiLSTM

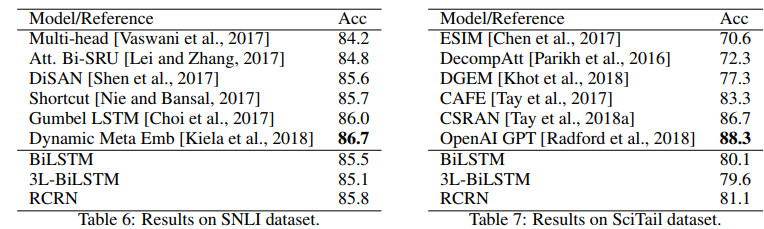

Comparison of several methods on the SNLI and SciTail datasets:

Here, Multi-head is from the paper:

Attention is all you need, NIPS 2017

Code:

https://github.com/jadore801120/attention-is-all-you-need-pytorch

https://github.com/Kyubyong/transformer

https://github.com/Lsdefine/attention-is-all-you-need-keras

shortcut is from the paper:

Shortcut-stacked sentence encoders for multi-domain inference

Gumbel LSTM is from the paper:

Unsupervised learning of task-specific tree structures with tree-lstms

Dynamic Meta Emb is from the paper:

Context-attentive embeddings for improved sentence representations

ESIM is from the paper:

Enhanced LSTM for natural language inference, ACL 2017

Code:

https://github.com/lukecq1231/nli

DecompAtt is from the paper:

A decomposable attention model for natural language inference, EMNLP 2016

Code:

https://github.com/erickrf/multiffn-nli

DGEM is from the paper:

SciTail: A textual entailment dataset from science question answering, AAAI 2018

Code:

https://github.com/allenai/scitail

CAFE is from the paper:

A compare-propagate architecture with alignment factorization for natural language inference

CSRAN is from the paper:

Co-stack residual affinity networks with multi-level attention refinement for matching text sequences, EMNLP 2018

Code:

https://github.com/vanzytay/EMNLP2018_NLI

OpenAI GPT is from the paper:

Improving language understanding by generative pre-training

Code:

https://github.com/openai/finetune-transformer-lm

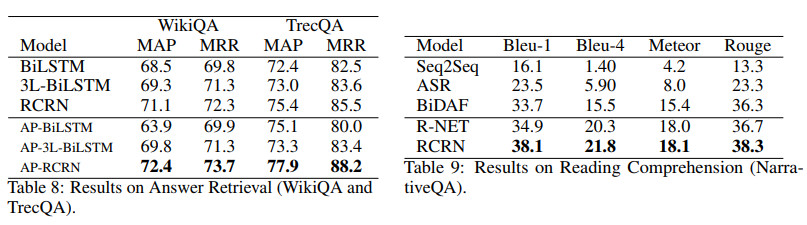

Comparison of several methods on answer retrieval and reading comprehension:

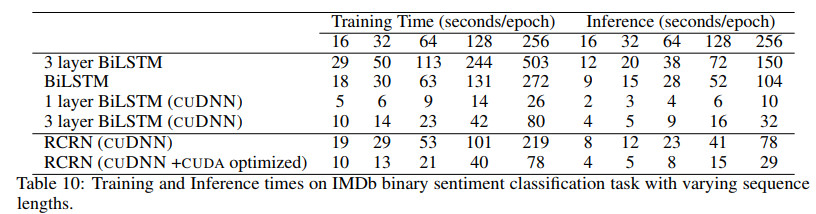

Runtime comparison of several methods:

Code:
